Interaction concept

Latest revision as of 16:19, 1 June 2016

Interaction is an important part of image-based medical applications, especially for manual and semi-automatic segmentation, intervention planning, and assistance systems for medical interventions (image-guided therapy or surgery). MITK has a generic interaction concept that structures, and thereby standardizes, the manipulation of data and artificial objects in 2D and 3D views.

Human-computer interaction (HCI) combines hardware and software components to establish a bidirectional flow of information. Because there are different types of hardware components or input devices (e.g. mouse, graphics tablet, tracking system), the first step in standardizing the input is to derive abstract events from device-dependent events. The next step is to interpret the meaning of an event, which often depends on the state of the software. Even the rather simple case of building a set of points and subsequently interacting with it involves multiple states (adding-a-point, point-is-selected, point-is-being-moved, etc.). MITK's interaction concept is organized around Mealy state machines, in which the output depends on both the current state and the incoming event. States and transitions are described and configured consistently in an XML file (Statemachine.xml) that is loaded at run time. In this way, different interaction behavior schemes (interaction patterns) used in common medical software can generally be loaded. It is also possible to adapt the interaction behavior to specific applications and workflows without recompiling the application.
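The two steps described above (abstracting device events, then interpreting them with a state machine) can be sketched in plain C++. This is a minimal sketch under stated assumptions, not MITK's implementation: all type, event and action names (`DeviceEvent`, `Event`, `PointSetStateMachine`, `"MovePoint"`, etc.) are hypothetical, and the transition table is built in code rather than read from Statemachine.xml. It only illustrates the idea that interaction behavior is data: swapping the table changes the behavior without changing the machine.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Hypothetical device-level events coming from different input devices.
enum class DeviceEvent { ShiftLeftClick, LeftClick, TabletPenDown, MouseDrag, MouseUp, Escape };

// Hypothetical device-independent (abstract) events.
enum class Event { AddPoint, SelectPoint, Move, Release, Deselect };

// States of the point-set interaction named in the text.
enum class State { AddingPoint, PointSelected, PointMoving };

// Step 1: map device-dependent events to abstract ones. A mouse click and a
// tablet pen-down both become the same abstract SelectPoint event.
Event ToAbstractEvent(DeviceEvent d) {
  static const std::map<DeviceEvent, Event> kMapping = {
      {DeviceEvent::ShiftLeftClick, Event::AddPoint},
      {DeviceEvent::LeftClick, Event::SelectPoint},
      {DeviceEvent::TabletPenDown, Event::SelectPoint},
      {DeviceEvent::MouseDrag, Event::Move},
      {DeviceEvent::MouseUp, Event::Release},
      {DeviceEvent::Escape, Event::Deselect}};
  return kMapping.at(d);
}

// A transition: the next state plus the action (output) fired on the way.
struct Transition {
  State next;
  std::string action;
};
using TransitionTable = std::map<std::pair<State, Event>, Transition>;

// Step 2: interpret abstract events with a Mealy machine, whose output depends
// on both the current state and the input event. The table is plain data, so
// it could be filled from a configuration file instead of being compiled in.
class PointSetStateMachine {
 public:
  explicit PointSetStateMachine(TransitionTable table) : table_(std::move(table)) {
    assert(!table_.empty());
  }

  // Returns the fired action, or "" if the event is ignored in this state.
  std::string Handle(Event e) {
    auto it = table_.find({state_, e});
    if (it == table_.end()) return "";
    state_ = it->second.next;
    return it->second.action;
  }

  State Current() const { return state_; }

 private:
  TransitionTable table_;
  State state_ = State::AddingPoint;  // assumed start state
};

// Hypothetical behavior scheme for the point-set example.
TransitionTable DefaultPointSetTable() {
  return {
      {{State::AddingPoint, Event::AddPoint}, {State::AddingPoint, "AddPoint"}},
      {{State::AddingPoint, Event::SelectPoint}, {State::PointSelected, "SelectPoint"}},
      {{State::PointSelected, Event::Move}, {State::PointMoving, "MovePoint"}},
      {{State::PointMoving, Event::Move}, {State::PointMoving, "MovePoint"}},
      {{State::PointMoving, Event::Release}, {State::PointSelected, "FinishMove"}},
      {{State::PointSelected, Event::Deselect}, {State::AddingPoint, "DeselectPoint"}}};
}

// Feeds device events through both steps and collects the fired actions.
std::vector<std::string> RunScenario(const std::vector<DeviceEvent>& input) {
  PointSetStateMachine machine(DefaultPointSetTable());
  std::vector<std::string> actions;
  for (DeviceEvent d : input) actions.push_back(machine.Handle(ToAbstractEvent(d)));
  return actions;
}
```

Feeding `RunScenario` the sequence Shift+click, click, drag, drag, release, Escape, release yields the actions AddPoint, SelectPoint, MovePoint, MovePoint, FinishMove, DeselectPoint, followed by an empty action: the final release is ignored because the table has no transition for it in the adding-a-point state. The same abstract event (e.g. `Move`) thus produces different outputs depending on the current state, which is what makes the machine a Mealy machine.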

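For further information, see the [Doxygen-generated documentation on MITK interaction](http://docs.mitk.org/nightly/InteractionPage.html) and [Tutorial step 10](http://docs.mitk.org/nightly/Step10Page.html).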

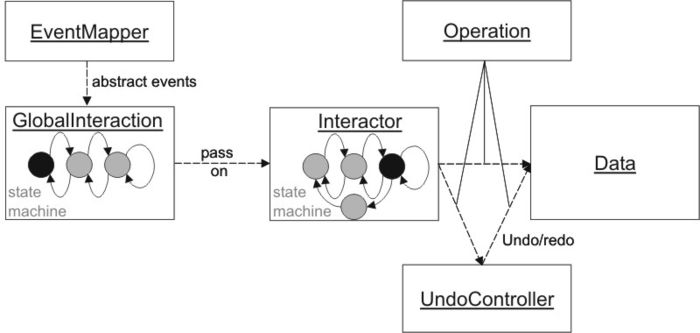

Fig 1 Overview of the interaction concept. Events from different event sources (e.g. mouse, graphics tablet, tracking system) are converted into abstract events by a singleton class, EventMapper. This class transmits the events to a singleton, GlobalInteraction. Depending on its internal state, this state machine delegates the events to the dedicated interactor objects. The data are manipulated by creating operation objects that are sent to a specific data object, where the operation is executed and changes the data accordingly. Additionally, the operation and its associated inverse operation (undo operation) are sent to the UndoController, which stores the operations according to the undo model. In case of an undo, the inverse operation is sent to the data and restores the previous state.
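The data-manipulation and undo path shown in Fig 1 can be sketched in the same way. This is a minimal sketch assuming a single operation type (moving one point) and a simple linear, stack-based undo model; `PointSetData`, `MoveOperation`, `SimpleUndoController` and `MovePointUndoable` are hypothetical names and are not MITK's Operation or UndoController API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Point {
  double x, y;
};

// Hypothetical operation object: move the point at `index` to `to`.
struct MoveOperation {
  std::size_t index;
  Point to;
};

// Data object: it changes itself only by executing operations sent to it.
class PointSetData {
 public:
  void AddPoint(Point p) { points_.push_back(p); }
  void ExecuteOperation(const MoveOperation& op) { points_.at(op.index) = op.to; }
  const Point& GetPoint(std::size_t i) const { return points_.at(i); }

 private:
  std::vector<Point> points_;
};

// Stores (operation, inverse operation) pairs; undo sends the inverse to the data.
// Assumed undo model: a plain stack, so the most recent operation is undone first.
class SimpleUndoController {
 public:
  void Record(PointSetData* target, MoveOperation op, MoveOperation inverse) {
    assert(target != nullptr);
    stack_.push_back({target, op, inverse});
  }

  // Returns false if there is nothing left to undo.
  bool Undo() {
    if (stack_.empty()) return false;
    Entry e = stack_.back();
    stack_.pop_back();
    e.target->ExecuteOperation(e.inverse);
    return true;
  }

 private:
  struct Entry {
    PointSetData* target;
    MoveOperation op;       // kept so a redo could be added; unused here
    MoveOperation inverse;  // restores the previous state
  };
  std::vector<Entry> stack_;
};

// Interactor side: build the operation and its inverse, send the operation to
// the data, then hand both to the undo controller.
void MovePointUndoable(PointSetData& data, SimpleUndoController& undo,
                       std::size_t index, Point to) {
  MoveOperation op{index, to};
  MoveOperation inverse{index, data.GetPoint(index)};  // captures the old position
  data.ExecuteOperation(op);
  undo.Record(&data, op, inverse);
}
```

The inverse operation is built before the forward operation executes, so it captures the old position. Undo then needs no knowledge of how the change was made: it simply sends another operation to the same data object, mirroring the path in the figure.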
